Anomaly Detection

The Mathematization of the Abnormal in the Metadata Society

In this essay, media theorist Matteo Pasquinelli reflects on the return of the abnormal into politics as a mathematical object. Algorithmic vision is about the understanding of vast amounts of data according to a specific vector: it may be about common patterns of behavior in social media, suspicious keywords in surveillance networks, buying and selling tendencies in stock markets, or the oscillation of temperature in a specific region of the planet. The eye of the algorithm blindly records emerging properties and forecasts tendencies based on large data sets. Such procedures of computation are pretty repetitive and robotic, and they generally operate along two main functions: pattern recognition and anomaly detection. The two epistemic poles of pattern and anomaly are two sides of the same coin of algorithmic governance. An unexpected anomaly can be detected only against the background of pattern regularity. And, conversely, a pattern emerges only through the median equalization of different tendencies. Here, mathematics resounds immediately as a new epistemology of power.

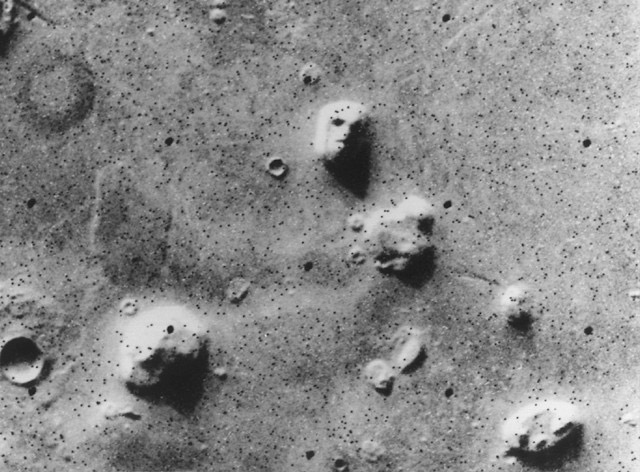

Canonical example of apophenia: a “human face” recognized on the surface of Mars. Image courtesy NASA, July 25, 1976

Introduction

In a book from 1890, the French sociologist and criminologist Gabriel Tarde was already recording the rise of information surplus and envisioning a bright future for the discipline of statistics as the new eye of mass media (that is, as a new computational or algorithmic eye, as we would say today). In his biomorphic metaphors, he wrote:

The public journals will become socially what our sense organs are vitally. Every printing office will become a mere central station for different bureaus of statistics just as the ear-drum is a bundle of acoustic nerves, or as the retina is a bundle of special nerves each of which registers its characteristic impression on the brain. At present Statistics is a kind of embryonic eye, like that of the lower animals which see just enough to recognise the approach of foe or prey.1

This quote helps introduce three fields of discussion that are crucial in the post-Snowden age of data politics and algorithmic governance: first, as the reference to enemy recognition suggests, the realm of battlefields and warfare, military affairs and geopolitics, drones and forensics (as a counter-practice of activism). Second, this reference to the enemy brings us to the field of sociology and criminology, to the definition and institution of the “internal enemy” of society (that is, the abnormal, in the tradition of Canguilhem, Foucault, and biopolitical studies). Third, an enemy also clearly appears from the point of view of labor exploitation, according to which the worker is an anomaly to measure, optimize, and sometimes criminalize (as Marxism records). In all these cases, of course, the position of the enemy, of the abnormal, of the antisocial individual as much as of the reluctant worker who falls under the eye of statistics and algorithms for data analysis can always be reversed, and a new political subject can be described and reconfigured (as the research project Forensic Architecture has recently stressed, for example).2

A further evolution of that primitive eye described by Tarde, today’s algorithmic vision, is about the understanding of global data sets according to a specific vector. The eye of the algorithm records common patterns of behavior in social media, suspicious keywords in surveillance networks, buying and selling tendencies in stock markets, or the oscillation of temperature in a specific region. These procedures of mass computation are pretty universal, repetitive, and robotic; nevertheless, they inaugurate a new scale of epistemic complexity (computational reason, artificial intelligence, limits of computation, etc.) that will not be addressed here.3 From the theoretical point of view, I will underline only the birth of a new epistemic space inaugurated by algorithms and the new form of augmented perception and cognition that comes with it: what is called here “algorithmic vision.” More empirically, the basic concepts and functions of algorithmic vision, and therefore of algorithmic governance, that I will try to explain are pattern recognition and anomaly detection. The two epistemic poles of pattern and anomaly are two sides of the same coin of algorithmic governance. An unexpected anomaly can be detected only against the background of pattern regularity. Conversely, a pattern emerges only through the median equalization of diverse tendencies. In this way, I attempt to clarify the nature of algorithmic governance and the return of the issue of the abnormal in a mathematical fashion.4

1. The rise of the metadata society: from the network to the datacenter

As soon as the internet was born, the problem of its cartography immediately arose, but a clever solution (the famous Markov chains of the Google PageRank algorithm) did not arrive until three decades later. The first datacenter set up by Google in 1998 (also known as “Google cage”)5 can be considered a milestone in the birth of the metadata society, as it was the first database to start mapping the internet topology and its tendencies on a global scale. In the last few years, the network society has radicalized a topological shift: beneath the surface of the web, gigantic datacenters have been turned into monopolies of collective data. If networks were about open flows of information (as Manuel Castells used to say), datacenters are about the accumulation of information about information—that is, metadata.
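The “Markov chains” behind PageRank can be made concrete. What follows is a minimal sketch of the textbook power-iteration formulation, not Google’s production system: a hypothetical random surfer follows an outgoing link with probability `damping` (0.85 is the value suggested in the original PageRank paper) and otherwise jumps to a random page. The function name `pagerank` and the four-page toy web are illustrative assumptions.

```python
def pagerank(links, damping=0.85, tol=1e-10, max_iter=1000):
    """Power iteration over the PageRank Markov chain.

    `links` maps each page to the list of pages it links to. With probability
    `damping` the surfer follows a link; otherwise it jumps to a random page.
    Pages without outgoing links spread their rank uniformly over all pages.
    """
    pages = sorted(set(links) | {p for targets in links.values() for p in targets})
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(max_iter):
        # Rank held by dangling pages (no outgoing links) is redistributed to everyone.
        dangling = sum(rank[p] for p in pages if not links.get(p))
        new = {p: (1.0 - damping) / n + damping * dangling / n for p in pages}
        for src, targets in links.items():
            if targets:
                share = damping * rank[src] / len(targets)
                for dst in targets:
                    new[dst] += share
        delta = sum(abs(new[p] - rank[p]) for p in pages)
        rank = new
        if delta < tol:  # the distribution has (numerically) stopped changing
            break
    return rank

# A hypothetical four-page web: who links to whom.
web = {"a": ["b", "c"], "b": ["c"], "c": ["a"], "d": ["c"]}
ranks = pagerank(web)
print(max(ranks, key=ranks.get))  # → c
```

Page c comes out on top because it collects links from three of the four pages, while page d, which nobody links to, keeps only its baseline share of the random jump. The result is the stationary distribution of the chain: a map of the web’s topology computed from metadata (who links to whom) rather than from the content of the pages.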

These sorts of technological bifurcations and forms of accumulation are not new. The history of technology can be narrated as the progressive emergence of new collective singularities out of the properties of older systems, as Manuel DeLanda often describes in his works.6 A continuous bifurcation of the machinic phylum: labor bifurcated into energy and information, information into data and metadata, metadata into patterns and vectors, and so on … These bifurcations also engendered fundamental epistemic shifts: for instance, the passage from industrial political economy to cybernetic mathematization and digitalization, and today to a sophisticated topology of datascapes. In fact, what we are discussing today under the idea of algorithmic governance and computational capitalism is the emergence of a complex topological space.

Specifically, metadata disclose the dimension of social intelligence that is incarnated in any piece of information. As I discussed earlier in an essay for Theory, Culture & Society, algorithms that mine metadata are used for basically three things: first, to measure the collective production of value and extract a sort of network surplus value (as in the case of Google and Facebook business models and with logistics chains such as Walmart and Amazon); second, to monitor and forecast social tendencies and environmental anomalies (as in the different surveillance programs of the National Security Agency (NSA) or in climate science); and third, to improve the machinic intelligence of management, logistics, and the design of algorithms themselves (as is well known, search algorithms continuously learn from the humans using them).7

Datacenters are not just about totalitarian data storage or brute force computation: their real power relies on the mathematical sophistication and epistemic power of algorithms used to illuminate such infinite datascapes and extract meaning out of them. What, then, is the perspective of the world from the point of view of such mass algorithms? What does the eye of an algorithm used for data mining actually see?

2. A new epistemic space: the eye of the algorithm

Modern perspective was born in Florence during the early Renaissance thanks to techniques of optical projection imported from the Arab world, where they were first used in astronomy, as Hans Belting reminds us in a crucial book.8 The compass that was oriented to the stars was turned downwards and pointed towards the urban horizon. A further dimension of depth was added to portraits and frescos, and a new vision of collective space was inaugurated. It was a revolutionary event of an epistemic kind, yet a deeply political one. Architects and art historians know this very well: it is not necessary to repeat it here.

When, in the 1980s, William Gibson had to describe cyberspace in his short story Burning Chrome and his novel Neuromancer, he had to cross a similar threshold: that of interfacing two different domains of perception and knowledge. How could the abstract space of Turing machines be rendered as a narrative environment? Cyberspace was not born just as a hypertext or virtual reality: from the beginning, it looked like an “infinite datascape.”9 The buildings of cyberspace were originally blocks of data, and if they resembled three-dimensional objects, it was only to domesticate and colonize an abstract space, namely the abstract space of any augmented mind. We should reread Gibson’s locus classicus to remember that the young cyberspace already emerged as a mathematical monstrosity. Gibson said of cyberspace:

A graphic representation of data abstracted from the banks of every computer in the human system. Unthinkable complexity. Lines of light ranged in the nonspace of the mind, clusters and constellations of data. Like city lights, receding.10

The intuition of cyberspace concerned the meta-navigation of vast data oceans. The first computer networks just happened to prepare the terrain for the vertiginous accumulation and verticalization of information that would occur only in the age of datacenters. As Luciana Parisi reminds us in her book Contagious Architecture, the question is how to describe the epistemic diversity and computational complexity inaugurated by the age of algorithms. On this issue, she also quotes the architect Kostas Terzidis, author of the book Algorithmic Architecture:

Unlike computerization and digitization, the extraction of algorithmic processes is an act of high-level abstraction […] Algorithmic structures represent abstract patterns that are not necessarily associated with experience or perception […] In this sense algorithmic processes become a vehicle for exploration that extends beyond the limit of perception.11

As a provisional conclusion, we may say that cyberspace is not the internet: cyberspace is the datascape used to map the internet, and it is accessible only in secret facilities that belong today to media monopolies and intelligence agencies. Cyberspace should therefore be described as the second epistemic scale of the internet, not the first one, which is the scale of our everyday experience of it and of its digital interfaces.

3. Algopolitics: pattern recognition and anomaly detection

In an interview with Wired magazine, Edward Snowden revealed an artificial intelligence system allegedly employed by the NSA to pre-empt cyberwar by monitoring internet traffic anomalies. This program is called MonsterMind and is apparently designed to “fire back” at the source of a malicious attack without human supervision.12 Tarde’s initial quote on statistics as a biomorphic eye to detect enemies was prophetic, and the prophecy can be extended to supercomputers: “Statistics is a kind of embryonic eye, like that of the lower animals which see just enough to recognise the approach of foe or prey.”

“Anomaly detection” is a technical term in data analysis that has recently become a buzzword in business solutions of any kind, together with another technical term—“pattern recognition.” What does an algorithm see when it looks at a datascape? The only way to look at vast amounts of data is to track patterns and anomalies. Despite their different fields of application, from social networks to weather forecasting, from war scenarios to financial markets, algorithms for data mining appear to operate along two universal functions: pattern recognition and anomaly detection.

What, then, is pattern recognition? It is the recognition of similar queries emerging in search engines, similar consumer behaviors in a population, similar data regarding seasonal temperatures: the rise of something meaningful out of a landscape of apparently meaningless data, the rise of a gestalt against a cacophony. It is what DeLanda, more precise in this than others, describes as the emergence of new singularities. On the other hand, anomalies are results that do not conform to a norm. What is an anomaly? It is what Grégoire Chamayou has described in his book A Theory of the Drone, in relation to military decisions about bombing and killing specific human targets solely on the basis of metadata analysis, when an anomaly of behavior emerges. In general, the unexpected anomaly can be detected only against a pattern regularity. And, conversely, a pattern emerges only through the median equalization of diverging tendencies. Anomaly detection and pattern recognition are the two epistemic tools of algorithmic governance. Mathematics (or, more precisely, topology) emerges here as the new epistemology of power, an epistemology of power that is yet to be explored.
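The claim that an anomaly appears only against a pattern, and that the pattern is itself a “median equalization,” has a fairly literal statistical counterpart. The sketch below uses the median as the pattern and the median absolute deviation (MAD) as the measure of ordinary variation, flagging values whose so-called modified z-score exceeds 3.5, a conventional cut-off. The function, the threshold, and the sample data are assumptions for illustration, not a description of any system discussed here.

```python
from statistics import median

def robust_anomalies(values, threshold=3.5):
    """Return the indices of values that stand out from the median pattern.

    The median plays the role of the pattern; the median absolute deviation
    (MAD) measures how far values ordinarily stray from it. The 0.6745 factor
    rescales the score so that it is comparable to a z-score for normal data.
    """
    center = median(values)
    mad = median([abs(v - center) for v in values])
    if mad == 0:
        # No ordinary variation at all: any departure from the median stands out.
        return [i for i, v in enumerate(values) if v != center]
    return [i for i, v in enumerate(values)
            if abs(0.6745 * (v - center) / mad) > threshold]

# Hypothetical daily counts of one search query; the last day spikes.
daily_queries = [102, 98, 101, 97, 100, 103, 99, 240]
print(robust_anomalies(daily_queries))  # → [7]
```

The spike barely moves the median (100.5), which is precisely why it can be seen: a mean (117.5 here) would have been dragged upward by the very anomaly it is supposed to expose.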

Another program, this one from the Defense Advanced Research Projects Agency (DARPA) and started in 2010, is probably much more revealing of how algorithmic governance works. It is called ADAMS: Anomaly Detection at Multiple Scales.13 But this one is somewhat public, and so it attracts less curiosity. The program is currently used to detect threats posed by individuals within a military organization, and its application to society as a whole can be much more nefarious than MonsterMind. Curiously, it has been developed to forecast the next Edward Snowden case, the next traitor, or to guess who will be the next crazy sniper, back from Iraq or Afghanistan, to shoot his mates out of the blue.

How does it work? Once again, the algorithm is designed to recognize patterns of behavior and detect anomalies diverging from the everyday routine, from a normative standard. ADAMS is supposed to identify a dangerous psychological profile simply by analyzing email traffic and looking for anomalies. This system is promoted as an inevitable solution for human resources management in crucial organizations such as intelligence agencies and the army. But the very same system can be used (and is already used) to track social networks or online communities, for instance, in critical geopolitical areas. Anomaly detection is the mathematical paranoia of the Empire in the age of big data.
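The essay does not describe ADAMS’s actual models, so the following is only a hypothetical sketch of the general idea of “diverging from the everyday routine”: each person is compared with their own recent past rather than with the population. Here a day of email traffic is flagged when it lies more than `k` standard deviations from the mean of the previous `window` days. The function name, parameters, and counts are all invented for illustration.

```python
from statistics import mean, stdev

def routine_breaks(daily_counts, window=7, k=3.0):
    """Return the indices of days that break an individual's own routine.

    Each day is compared with the mean and standard deviation of the
    `window` days before it, so the norm is that person's recent habit,
    not a population average.
    """
    flagged = []
    for day in range(window, len(daily_counts)):
        history = daily_counts[day - window:day]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # A perfectly regular routine: any change at all is a break.
            if daily_counts[day] != mu:
                flagged.append(day)
        elif abs(daily_counts[day] - mu) / sigma > k:
            flagged.append(day)
    return flagged

# Hypothetical emails sent per day by one employee.
counts = [12, 15, 11, 14, 13, 12, 16, 14, 13, 61, 15]
print(routine_breaks(counts))  # → [9]
```

The flagged day then enters the baseline for the following week and inflates its spread, so the norm is recalibrated by the very anomaly it has just detected.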

The two functions of pattern recognition and anomaly detection are applied blindly across different fields. This is one of the awkward aspects of algorithmic governance. An interesting case is the software adopted by the Los Angeles Police Department (LAPD) and developed by a company called PredPol, founded by Jeffrey Brantingham, an anthropologist, and George Mohler, a mathematician. The PredPol algorithm is said to guess twice as accurately as a human being in which block of Los Angeles a petty crime is likely to happen. Based on decades of data collected by the LAPD, it more or less follows the “broken windows” theory.

What is surprising is that the mathematical equations developed to forecast earthquake aftershocks along the San Andreas fault are also applied to forecast patterns of petty crime across Los Angeles. This gives an idea of the universalist drive of algorithmic governance and its weird political mathematics: it is uncanny, or maybe not, to frame crime as a sort of geological force. But perhaps organized and not-so-organized crime should take more pride in being compared to an earthquake than to the emergent intelligence of slime mold.
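The seismological borrowing can be illustrated with a self-exciting (Hawkes-type) point process, the family of models on which aftershock forecasting draws and which published work by Mohler and colleagues adapted to crime data. This is a simplified sketch, not PredPol’s actual model: the rate of events at time `t` is a constant background rate plus a contribution from every past event that fades exponentially, so each incident, like each tremor, temporarily raises the likelihood of another. The parameter values `mu`, `alpha`, and `beta` are arbitrary assumptions.

```python
import math

def intensity(t, past_events, mu=0.2, alpha=0.5, beta=1.0):
    """Conditional intensity of a self-exciting process with exponential decay.

    lambda(t) = mu + sum over past events t_i < t of alpha * beta * exp(-beta * (t - t_i))

    mu    -- background rate of events (the "steady state" of the area)
    alpha -- expected number of follow-up events triggered by each event
    beta  -- decay rate of the triggering effect (per unit of time)
    """
    return mu + sum(alpha * beta * math.exp(-beta * (t - ti))
                    for ti in past_events if ti < t)

# Hypothetical event times (in days) recorded in one city block.
events = [1.0, 1.5, 2.0]
for t in (0.5, 2.5, 10.0):
    print(t, round(intensity(t, events), 3))
```

Before the first event the rate equals the background `mu`; just after the cluster it roughly quadruples, then it decays back towards `mu`. A hotspot map would, under these assumptions, evaluate such a rate for each grid cell and send patrols to the highest ones.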

4. The mathematization of the abnormal

In a recent essay for e-flux journal, the artist Hito Steyerl recalled the role of computation in the making and perception of everyday digital images.14 Computation entered the domain of visibility some time ago: as we know, all digital images are encoded by algorithms, and algorithms intervene to adjust definition, shapes, and colors.

Aside from this productive role of algorithms, we can also trace a normative one. One of the big problems of media companies such as Google and Facebook, for instance, is how to detect pornographic material and keep it away from children. It is a titanic task with some comical aspects. Steyerl found that specific algorithms have been developed to detect specific patterns of the human body and their unusual combination in positions that would suggest that something sexual is going on. Body combinations are geometricized to recognize reassuring patterns and detect offensive anomalies.

Some parts of the human body are very easy to simplify in a geometric form. There is an algorithm, for instance, designed to detect literal “ass holes,” which are geometrically very simple, as you can imagine. Of course, the geometry of porn is complex and many “offensive” pictures manage to skip the filter. In general, what algorithms are doing here is normalizing the abnormal in a mathematical way.

According to Deleuze, Foucault explored with his idea of biopolitics the power relation between regimes of visibility and regimes of enunciation.15 Today, the regime of knowledge has expanded and exploded towards the vertigo of augmented and artificial intelligence. The opposition between knowledge and image, thinking and seeing, appears to collapse, not because all images are digitalized (that is to say, turned into data) but because a computational and algorithmic logic is found at the very source of general perception. The regime of visibility collapses into the regime of computational rationality. Algorithmic vision is not optical; it is about a general perception of reality via statistics, metadata, modeling, mathematics. Whereas the digital image is just the surface of digital capitalism, its everyday interface and spectacular dimension, algorithmic vision is its computational core and invisible power.

Canguilhem, Foucault, Deleuze and Guattari, the whole of French poststructuralism, and postcolonial studies have written about the history of abnormality and the always political constitution of the abnormal. The big difference with respect to the traditional definition of biopolitics as the regulation of populations is that, in the society of metadata, the construction of norms and the normalization of abnormalities are a just-in-time and continuous process of calibration. Bringing Foucault to the age of artificial intelligence, we can say that, after the periodization based on the passage from the institutional law to the biopolitical norm, we are now entering what we could provisionally define as the age of pattern recognition and anomaly detection, in which collective behavior becomes a mathematical vector. It is my opinion that the traditional notion of the abnormal is now reentering the history of governance and the philosophy of power in a mathematical way, as an abstract and mathematical vector. Power in the age of algorithmic governance is about steering along these vectors and navigating an ocean of data by recognizing waves of patterns and, in so doing, making a decision whenever an anomaly is encountered, and a political decision when a thousand anomalies raise their heads and make a new, dangerous pattern emerge on the global landscape.16
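The idea of norms produced by “a just-in-time and continuous process of calibration” can be sketched with an exponentially weighted moving average, one common way of maintaining a running baseline. In this hypothetical example (the function name, the smoothing factor `alpha`, and the tolerance are assumptions), the norm is updated after every observation, so a persistent deviation is flagged at first and then absorbed: the abnormal is literally normalized.

```python
def calibrating_norm(stream, alpha=0.3, tolerance=5.0):
    """Yield (value, norm, is_anomaly) for each observation in a sequence.

    The norm is an exponentially weighted moving average: it is compared with
    each new value and then nudged towards it by the fraction `alpha`.
    """
    norm = stream[0]
    for value in stream:
        is_anomaly = abs(value - norm) > tolerance
        yield value, norm, is_anomaly
        # Recalibrate: the new observation becomes part of the norm.
        norm = alpha * value + (1 - alpha) * norm

# A behavior that shifts from 10 to 20 and stays there.
readings = [10] * 5 + [20] * 10
flags = [i for i, (_, _, anomalous) in enumerate(calibrating_norm(readings)) if anomalous]
print(flags)  # → [5, 6]
```

Only the first two readings of the new regime are treated as abnormal; from the third onward the norm has moved close enough that the same behavior passes as normal.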

5. The anomaly of the common

Gabriel Tarde, from whom we read the initial quote, had a particular interest in the imitative behavior of crime, in the way in which crime patterns spread across society. Nevertheless, another aspect of Tarde’s research was his focus on the cooperation and imitation between brains: the way in which new patterns of knowledge and civilization emerge, as Maurizio Lazzarato has also reminded us.

William Gibson had already devoted his 2003 novel Pattern Recognition to this very issue. As we know, this fundamental capacity of perception and cognition was also investigated by the Gestalt school in Berlin a century ago. Interestingly, Gibson brings pattern recognition to the full scale of its political consequences. “People do not like uncertainty,” he wrote. One of the basic drives of human cognition is to fill the existential void by superimposing a reassuring pattern, never mind if it comes in the guise of a conspiracy theory, as happened after 9/11.17

Specifically, Gibson’s novel engages with the constant risk of apophenia. Apophenia is the experience of seeing patterns or connections in random or meaningless data, in the most diverse contexts, including gambling and paranormal phenomena. It is when religious images are recognized in everyday objects or humanoid faces on the surface of Mars (see the canonical picture of the Cydonia region of Mars taken by the NASA spacecraft Viking 1 on July 25, 1976).
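Apophenia has a well-known statistical form: search enough random data and something will appear to match. In the sketch below (the seed, the sizes, and the helper `pearson` are assumptions made for illustration), a “target” series and 200 candidate series are all drawn independently from the same Gaussian noise, so no real relationship exists; yet the best-matching candidate typically shows a correlation that, taken in isolation, would look like a meaningful pattern.

```python
import random

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5

rng = random.Random(1976)  # fixed seed so the run is reproducible
target = [rng.gauss(0, 1) for _ in range(20)]
candidates = [[rng.gauss(0, 1) for _ in range(20)] for _ in range(200)]

# Search pure noise for the series that best "resembles" the target.
best = max(candidates, key=lambda c: abs(pearson(target, c)))
print(f"best |r| found in noise: {abs(pearson(target, best)):.2f}")
```

The more candidates one searches, the stronger the best spurious match tends to be; at the scale of a datacenter, the supply of such “faces on Mars” is practically unlimited.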

I argue that, as in the case of the humanoid face on Mars, algorithmic governance is apophenic too: a paranoid recognition and arbitrary construction of political patterns on a global scale. Indeed, there is an excessive belief in the almighty power of algorithms, in their efficiency, and in the total transparency of the metadata society. The embryonic eye of the algorithm, that is, algorithmic vision, is growing with difficulty, for several reasons. First, due to information overflow and the limits of computation, algorithms always have to operate on a simplified and regional set of data. Second, different mathematical models can be applied, and results may vary. Third, in many cases, from military affairs to algotrading and web ranking, algorithms influence the very field that they are supposed to measure. Algorithmic bias, an example of such a vicious feedback loop, is the problematic core of algorithmic governance. As Parisi has underlined, aside from these extrinsic limits, the regimes of computation have to cope with specific intrinsic limits, such as the entropy of data, randomness, and the problem of the incomputable. The eye of the algorithm is always dismembered, like the eye of any general intelligence.
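The third limit, algorithms influencing the very field they measure, can be reduced to a toy model often used in critiques of predictive policing. The sketch is deliberately simplistic and entirely hypothetical: two districts have the same underlying crime rate, but an incident is recorded only where the single patrol is sent, and the patrol is always sent where the most incidents have been recorded.

```python
def patrol_feedback(recorded, days):
    """Simulate a measurement that feeds back into what gets measured.

    `recorded` holds the incident counts per district at the start. Each day
    the patrol goes to the district with the highest count (ties go to the
    lowest index), and one more incident is recorded there, and only there,
    because nobody is watching the other districts.
    """
    recorded = list(recorded)
    for _ in range(days):
        target = max(range(len(recorded)), key=lambda i: recorded[i])
        recorded[target] += 1
    return recorded

# Two districts with identical real crime, separated by one early record.
print(patrol_feedback([6, 5], days=100))  # → [106, 5]
```

A one-incident head start becomes a 106-to-5 disparity that says nothing about the districts and everything about where the algorithm looked.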

An ethics of the algorithm is yet to come: the problem of algorithmic apophenia is one of the issues that we will discuss more often in the coming years, together with the autonomous agency and epistemic prosthesis of algorithms and all their legal consequences. Apophenia, though, is not just about recognizing a wrong meaning in meaningless data; it may also be about inventing the future out of a meaningless present. Creativity and paranoia sometimes share the same perception of a surplus of meaning. Political virtue in the age of algorithmic governance, then, relates to the perception of a different future for information surplus and its epistemic potentiality. Aside from the defense of privacy and the regulation of the algorithmic panopticon, other political strategies must be explored. We perhaps need to invent new institutions able to intervene at the same scale of computation as governments, and to reclaim massive computing power as a basic right of “civil society” and its autonomy. This is the data activism for the data politics of the future.

I would like to conclude by going back to the issue of enemy recognition and the perspective of the world from the eye of the algorithm. In a short chapter titled “Algorithmic Vision,” Eyal and Ines Weizman stress that “the technology of surveillance and destruction are the same as those used in forensics to monitor these violations.”18 The practice of the Forensic Architecture project has shown in different cases that the same technologies that are involved in war crimes as apparatuses of vision, control, and decision can be reversed to become a political tool. In the same way, we may say that the technologies used for pattern recognition and anomaly detection on the geopolitical and geo-economic scale can be reversed and repurposed. We could extend the same approach to the technosphere in general and imagine a different political usage and purpose for mass computation and global algorithms. Humankind has always been about an alliance with alien forms of agency: from ancestral microbes to artificial intelligence. A progressive political agenda for the present is about moving at the same level of abstraction as the algorithm in order to make the patterns of new social compositions and subjectivities emerge. We can, and we have to, produce new revolutionary institutions out of data and algorithms. If the abnormal returns to politics as a mathematical object, it will have to find its strategy of resistance and organization, in the upcoming century, in a mathematical way.