The Radioactive Footprint of the Anthropocene

Assessing the effects of artificial radioactivity on human bodies and natural environments has a special place in the history of risk regulation. Angela Creager shows how studies of the effects of (low-dose) radiation exposure on ecosystems and the food web moved ecologists, geneticists, and biomedical researchers to create tools for researching, assessing, and regulating the health and environmental impacts of toxic chemicals and other contaminants. This, in turn, has provided a key basis, and an epistemological reckoning, for understanding and defining the anthropogenic danger to life on Earth.

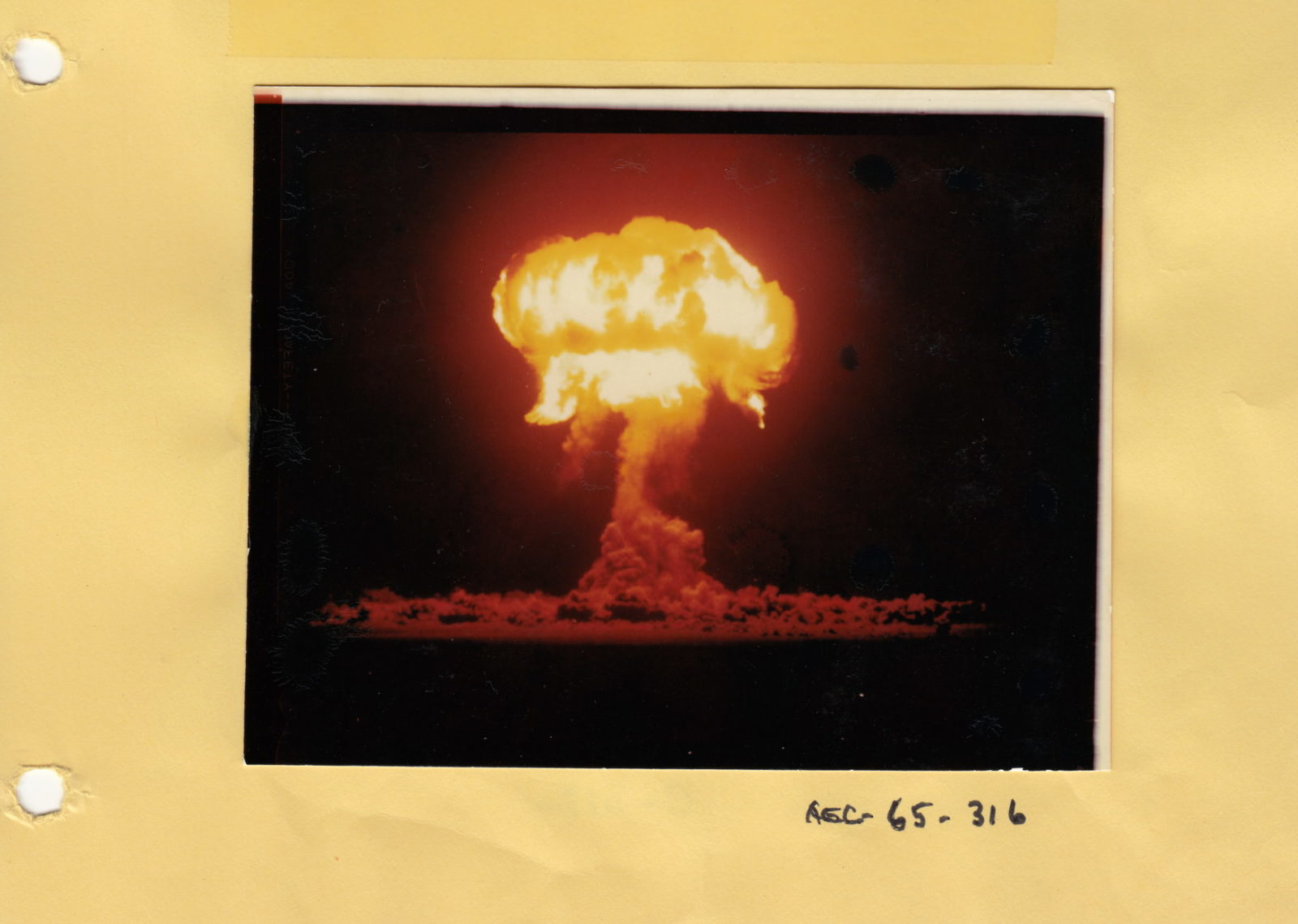

The various anthropogenic markers under consideration reflect different scientific specialties or ways of knowing the global environment.1 Each offers a distinct view of how humans have, quite literally, changed the world. My contribution argues that the nuclear Anthropocene has guided how scientists and citizens understand the manifold residues of human activities in the world.2 Scientific investigations of the atomic nucleus, as well as of radioisotopes, go back to the early twentieth century, but the attempt to master nuclear fission for weapons during World War II and the Cold War took the science and technology of the atom to a new and more violent scale. (See below.) The recognition of Earth’s global environment nucleated from the anthropogenic conditions of the atomic age.3 Other scholars have traced the broader contours of this complex legacy.4 I will focus on how problems associated with artificial radioactivity led ecologists, geneticists, and biomedical researchers to understand environmental contamination and low-dose exposures.5 These concepts and tools subsequently shaped the science and regulation of toxic chemicals and other human-generated environmental contaminants.6

Radioecology

To start on familiar ground, the military development of atomic energy (first by the US) resulted in the generation of many long-lasting radioelements not naturally present on Earth, such as plutonium, as well as a wide variety of fission byproducts, most of them radioactive isotopes. Many of these radioisotopes were isotopes of toxic chemical elements, and their half-lives and biological effects were not well understood. Even as the Manhattan Project began secret research on these radioisotopes, they were already contaminating the planet. Nuclear waste from the production of fissionable material and fallout from the above-ground detonation of hundreds of atomic weapons dispersed radioactive elements throughout the world from 1943 to 1963.7

Although the US bomb project was shrouded in secrecy, radioactivity from the detonation of the first nuclear device and waste from the production of plutonium betrayed its existence. In New Mexico after the Trinity blast, livestock on nearby ranches suffered from radiation exposure, with skin burns, hair loss, and bleeding.8 (Ranchers were also exposed, though they did not show immediate effects.) In August 1945, employees at a Kodak production plant noticed exposure spots on their photographic film, providing another kind of evidence of radioactivity from the top-secret nuclear test blast.9 The US government tried to prevent information breaches through its stringent national security apparatus, but the Army realized it could not censor the natural environment. Manhattan Project officials nonetheless often denied civilian observations of the project's activities and disclaimed any responsibility for their negative effects. In a few cases, such as with Kodak, the government brought civilians into its regime of secrecy to prevent further disclosure. For reasons related to both national security and human safety, the environmental movement and biological effects of the radioactive materials released by the atomic bomb project became vital (if secret) topics of investigation for the Manhattan Project and subsequently for its postwar successor, the Atomic Energy Commission.

In Hanford, Washington, the Manhattan Project contracted with fisheries researcher Lauren Donaldson of the University of Washington to investigate the effects of radioactivity on aquatic life.10 The salmon industry along the Columbia River, into which cooling water from the Hanford reactors would be dumped, was worth eight to ten million dollars per year.11 In addition, the river was a source of drinking water for local populations.12 Any impact on fish (or people) could alert locals to the nature of secret military activities on the Hanford Reservation.

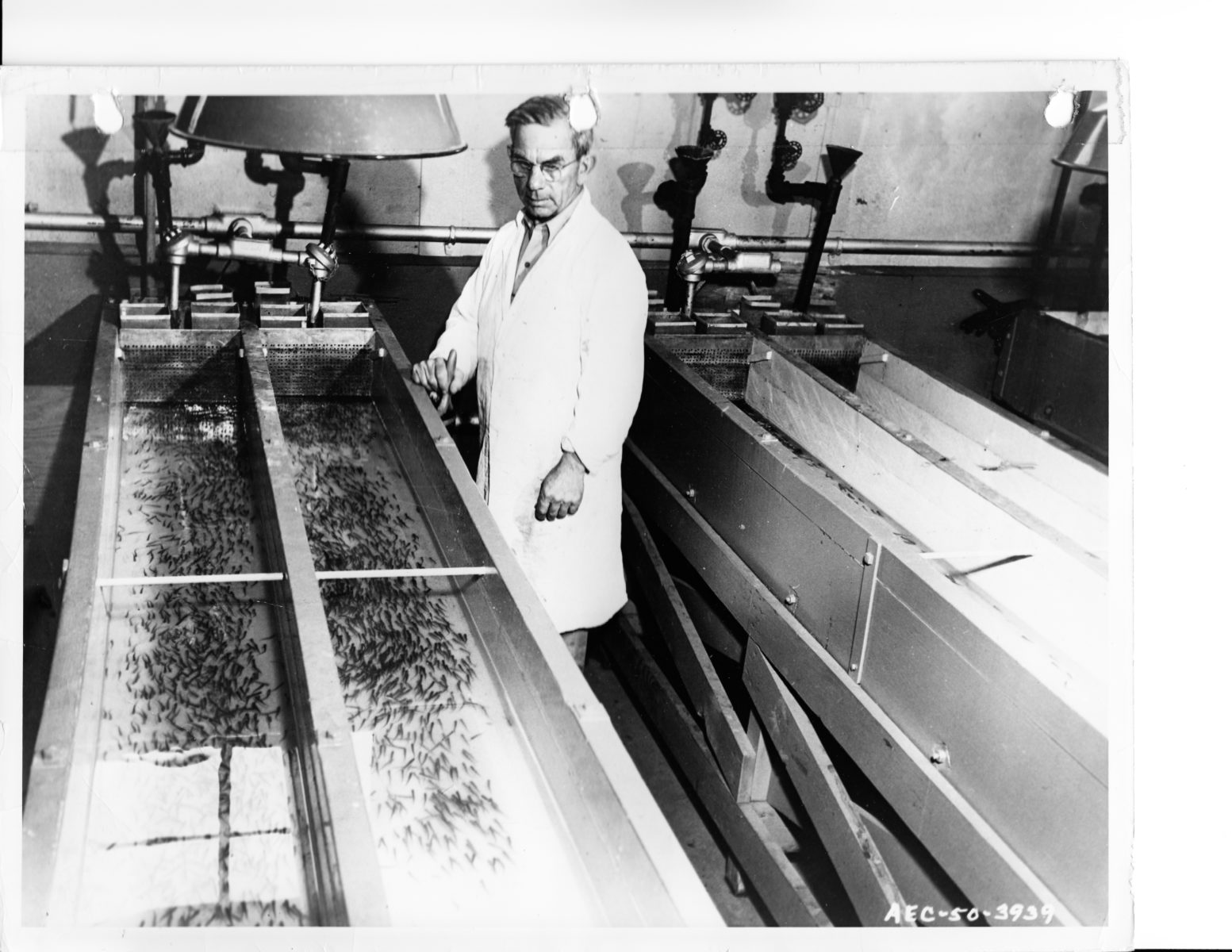

Donaldson and his coworkers demonstrated that fish, like mammals, suffer from radiation sickness, and that exposure to sub-lethal doses of ionizing radiation caused birth defects in salmon. These studies were done in the Applied Fisheries Laboratory in Seattle, but Donaldson argued that research was also needed on site at the Columbia River. An Aquatic Biology Laboratory was established at Hanford in June 1945 to study the effects of cooling water from the nuclear reactors (called effluent) on salmon and trout at various stages of development. Hanford scientists also studied the levels of radioactivity in the water and in freshwater organisms. (See below.) In 1946, they found that fish concentrate radioactivity in their bodies (especially in the liver and kidneys) following ingestion; in the river, this radioactivity came from radioisotopes of naturally occurring minerals and other elements that were irradiated as the water cooled the reactors. Follow-up studies showed that these radioelements could be concentrated in the fish up to several thousand-fold over their levels in the river water.13

A researcher at AEC’s Hanford Aquatic Biology Laboratory, standing next to the tank of developing fish used in radiation experiments. Courtesy US National Archives. RG 326-G, box 2, folder 2, AEC-50-3939, public domain

Hanford scientists collecting plankton in nets to study the movement of radioactivity through Columbia River marine life. A current meter is being used to measure the rate of flow of the river. Photo of Hanford, Washington. Courtesy US National Archives. RG 326-G, box 2, folder 2, AEC-50-3938, public domain

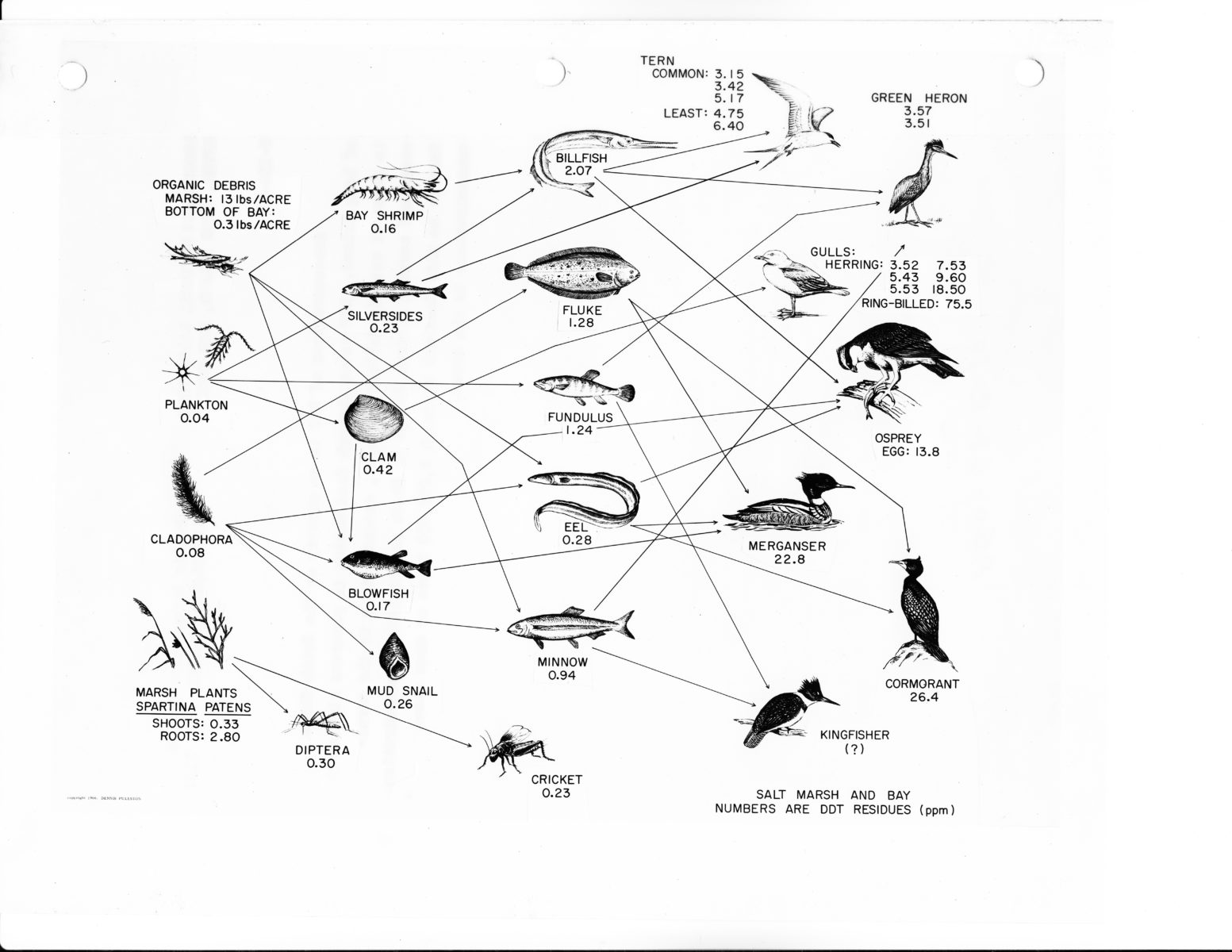

The radioactivity was also moving between organisms. As early as 1947, a report circulating within the Atomic Energy Commission (AEC) mentioned the “transfer of radioactive material in ‘food chains’” from plankton to fish.14 Hanford scientists did not publish these findings in the open literature until the mid-1950s, by which time ecologists elsewhere had begun documenting similar effects.15 By the 1960s, environmental scientists had begun to regard the behavior of chemical contaminants as similar to that observed with radioisotopes. At the AEC’s Brookhaven National Laboratory, George Woodwell and coworkers investigated how DDT moved through ecosystems and entered food webs, finding that it showed the same pattern of bioconcentration that had been observed earlier with certain radioactive elements.16

Original caption reads “Concentrations of DDT in an estuary on the South Shore of Long Island. DDT moves through food chains in patterns similar to those of certain fallout isotopes, concentrating in the carnivores and causing serious damage to these populations. Concentration factors in this food web approach 1,000,000 times or more above concentrations in the water.” Courtesy Brookhaven National Laboratory. US National Archives, RG 434-SF, box 15, folder 4, AEC-68-8289, public domain

The nuclear legacy included not only this disturbing behavior of bioconcentration but also a broader attention to the hazards of invisible, low-level contaminants. As Woodwell put it, the attention to one part per billion in the environment “in itself was a revolution,” and the realization that biotic studies required measurement in the “range of nanograms and picograms, nanocuries and picocuries” became a defining feature of environmental science.17 As ecologists F. Ward Whicker and Vincent Schultz observed, when it came to studying processes involved in the spread of “smog, pesticides, and other chemical substances that may threaten the environment,” radioisotopes were a “model pollutant.”18

Ionizing radiation as a health hazard

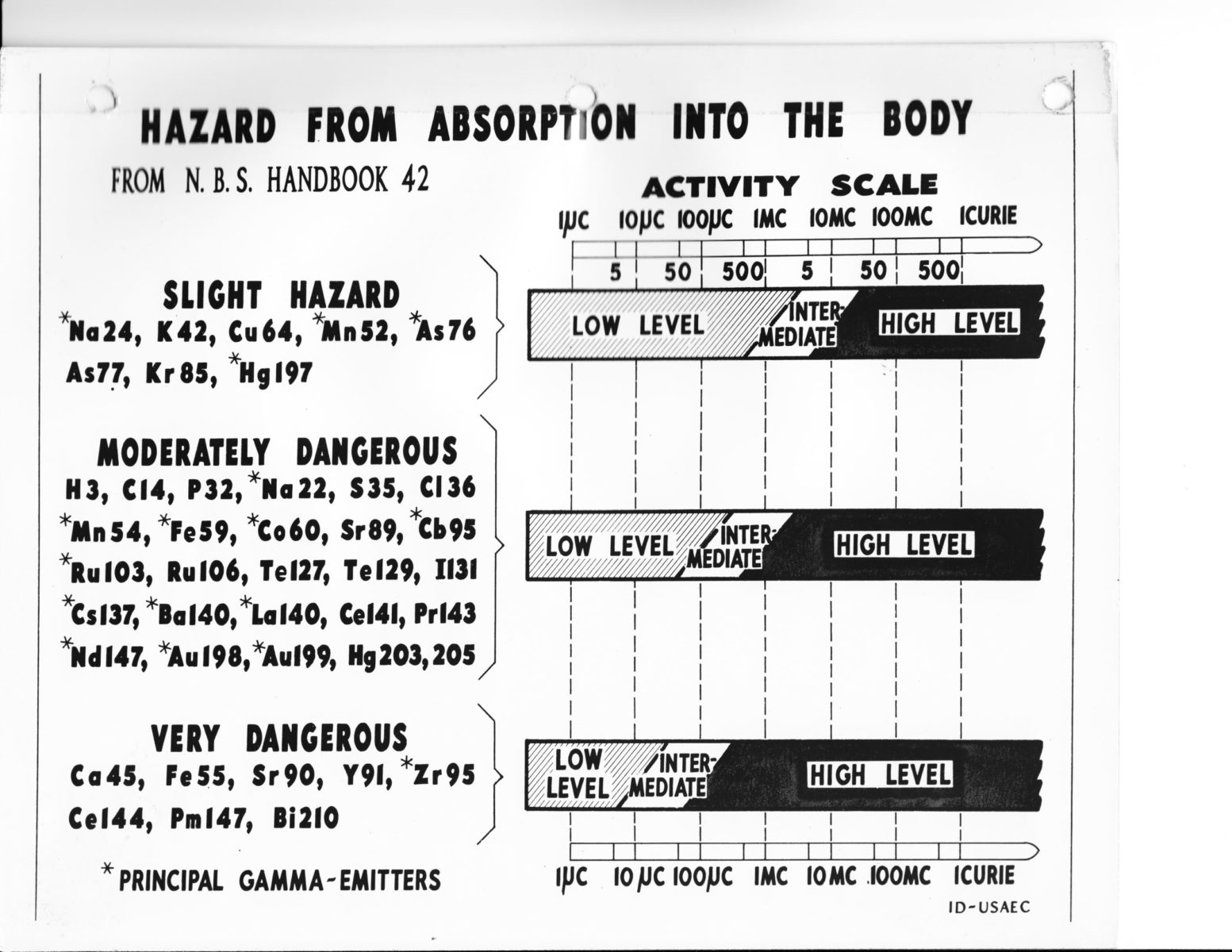

At the same time, studies of the biological effects of radioactivity shed light on the health consequences of low-dose, long-term exposure. During and after the Manhattan Project, radiological safety drew on a toxicological framework that assumed the existence of an exposure dose below which health hazards would be low to negligible.19 (See below.) Admittedly, some experiments, particularly on the genetic effects of radiation, suggested that such a threshold did not exist. In 1946, the National Committee on Radiation Protection changed the term “tolerance dose” to “permissible dose” in recognition that the limits specified by its recommendations might not be certifiably safe.20 Nonetheless, health physicists and government officials operated on the assumption that hazards from radiation exposure could be safely managed. At the Oak Ridge Institute of Nuclear Studies, the US government offered researchers training courses in handling and measuring radioactivity. In 1955, as part of the Atoms for Peace initiative, these courses were opened to foreign workers as well (though generally not to those from Soviet-aligned countries).21 This was one of many international initiatives aimed at promoting the use of nuclear technologies, as well as trust that they could be safe.22

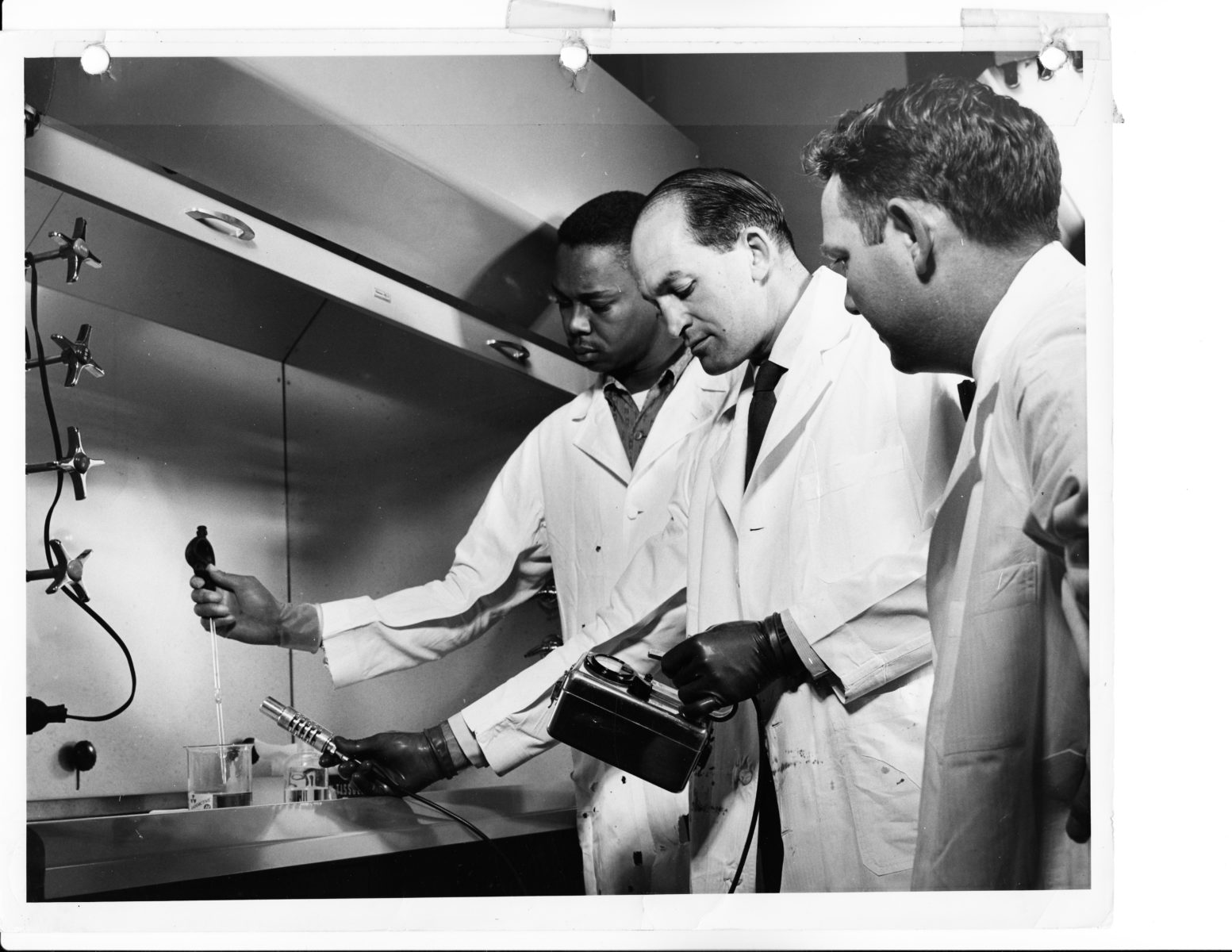

Schematic diagram depicting the hazards of different radioisotopes if absorbed into the human body. Adapted from National Committee on Radiation Protection, Safe Handling of Radioisotopes. National Bureau of Standards Handbook No. 42, 1949. US National Archives, RG 326-G, box 2, folder 2, public domain

Original caption reads: “Provide Training for Increased Opportunity. Research workers learn the techniques of using radioisotopes under the watchful eye of a nuclear scientist at the Atomic Energy Commission’s isotope training facilities in Oak Ridge, Tennessee. Thousands of research workers from many nations across the world have learned how to use radioisotopes for application in their own fields such as agriculture, medicine and industry. This program is operated for the AEC by the Oak Ridge Institute of Nuclear Studies.” The title and inclusion of a Black researcher may well be in response to the US Civil Rights movement. Photograph taken by James E. Westcott, 1958. ORO 58-0455C, AEC-65-7535, US National Archives, RG 326-G, box 9, folder 4, public domain

Most feared among radiation’s dangers was cancer, which could appear only after a long latent period following exposure. In the 1950s a group of geneticists, drawing largely on studies in radiation biology and medicine, argued that human cancers typically arose from mutations to somatic cells.23 This conception subverted the longstanding distinction among health physicists between so-called somatic effects of radiation (particularly cancer), for which a safety threshold was thought to operate, and genetic effects, which were known to occur even at low exposure levels. Geneticists instead connected the mutagenizing ability of an agent such as radiation to its role in cancer. Because there appeared to be no threshold for genetic damage, even low-level exposures might induce cancer-producing somatic mutations.24 In addition, the “multi-stage model” of carcinogenesis proposed by Peter Armitage and Richard Doll in 1954 treated cancer as the result of not just one, but several, cellular events.25 These events could be mutations, such as those induced by radiation. In the 1960s, biochemists and biophysicists showed that ionizing radiation induced mutations through specific kinds of DNA damage, giving the somatic mutation theory a clear mechanistic basis.26 In 1972, the National Academy of Sciences’ report on the Biological Effects of Ionizing Radiation advised that the carcinogenic effects of ionizing radiation should be regarded as linearly dose-dependent, with no threshold below which damage did not occur.27

This understanding of the carcinogenicity of radiation, even at low dose, informed a parallel concern with exposure to synthetic chemicals in pollutants, pesticide residues, consumer products, and drugs. Chemicals, like radiation, were presumed to act at the level of DNA in causing mutations and potentially cancer.28 Many scientists turned their attention to harnessing their knowledge and techniques to detect carcinogens, with an eye towards regulatory testing. As Scott Frickel has shown, by the early 1970s genetic toxicology brought radiation and chemicals under the same umbrella of environmental mutagens, and this new field generated the research and expertise critical to new government regulation of these substances.29

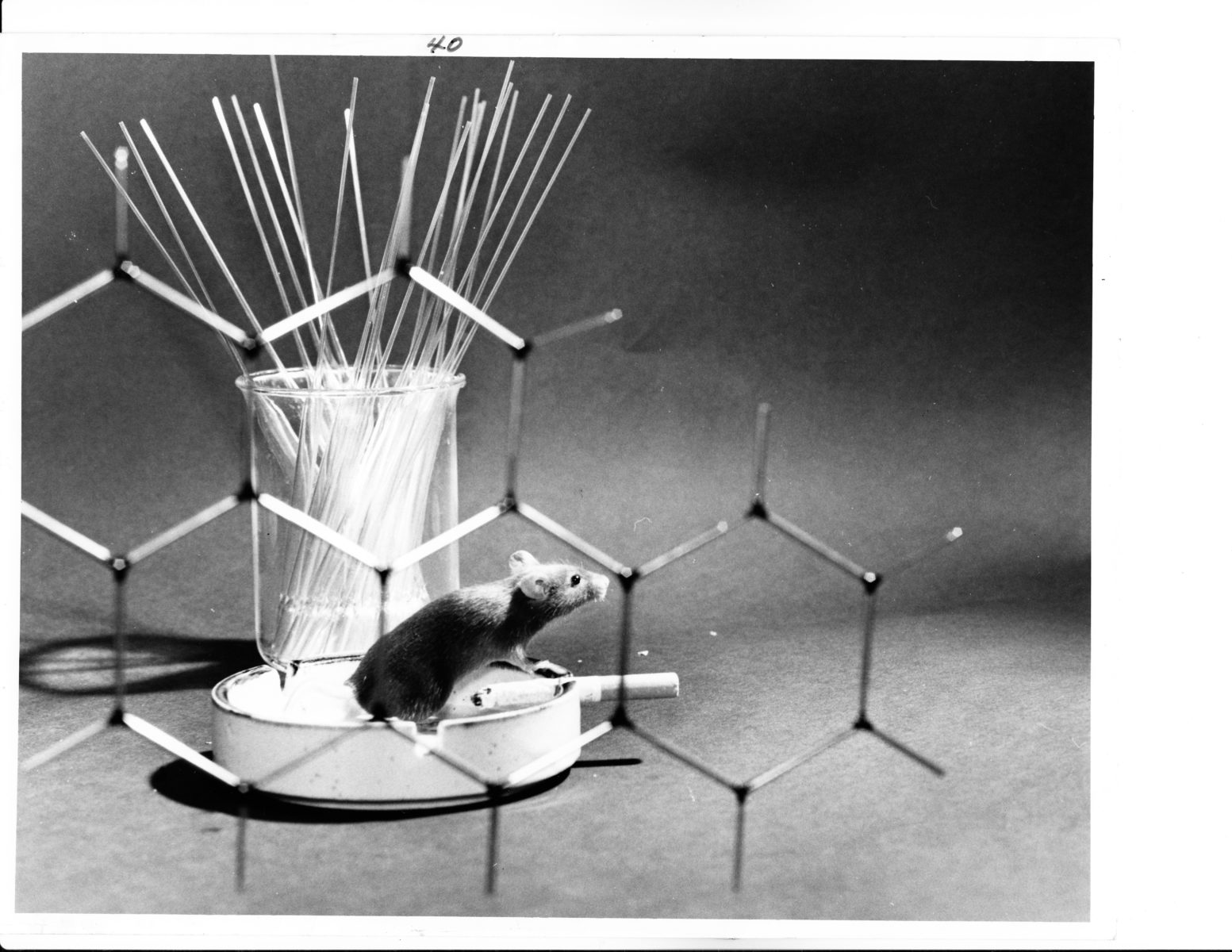

“Mouse Carcinogen.” In the late 1960s and 1970s, AEC researchers at Oak Ridge National Laboratory and other facilities focused on environmental carcinogens, which included many chemicals as well as ionizing radiation. The molecular model appears to be of benzo[a]pyrene, a prime carcinogen in cigarette smoke. 1972. Courtesy US National Archives. RG 434-SF, box 16, folder 3, public domain

In the US, federal agencies responded to the widespread belief that environmental agents were responsible for the majority of human cancers. In 1968, the National Cancer Institute launched a “Plan for Chemical Carcinogenesis and the Prevention of Cancers.” During the subsequent years the agency publicized an estimate that as much as 90 percent of human cancer was attributable to environmental agents.30 As Soraya Boudia has observed, radiation protection law, which in the US first provided a population-level exposure limit in 1958, put forth a risk-based framework for conceptualizing and controlling the health effects of low-level toxic chemicals.31

A life-threatening marker

Rachel Carson’s Silent Spring began with a comparison of toxic chemicals to radioactivity, extending the widespread alarm about exposure to hazardous radioactive fallout from weapons testing to include pollution from the petrochemical industry: “In this now universal contamination of the environment, chemicals are the sinister and little-recognized partners of radiation in changing the very nature of the world—the very nature of life.”32 In this sketch I have tried to illustrate how the related concerns about radiation and chemicals underlay the growth of environmental life science, even as they also informed social movements and political demands for action.

Historians have previously demonstrated the ways in which the atomic age ushered in global monitoring systems and computer modelling of the environment.33 Yet the catastrophic cost of anthropogenic change, as exemplified by climate change, is its terrible toll on living organisms.34 In this respect, an anthropogenic marker associated with the changing knowledge of life and how it is threatened by human activities seems especially suitable. The accumulation of human-made nuclear contamination has shaped not only our earthly environment, but also our epistemological reckoning with the ghastly aftereffects of our radioactive and chemical creations.

Angela N. H. Creager is the Thomas M. Siebel Professor in the History of Science at Princeton University. Her current book project, “Making Mutations Matter,” examines science and regulation in the 1960s through the 1980s, focusing attention on how researchers conceptualized and developed techniques for detecting environmental carcinogens.

Please cite as: Creager, A N H (2022) The Radioactive Footprint of the Anthropocene. In: Rosol C and Rispoli G (eds) Anthropogenic Markers: Stratigraphy and Context, Anthropocene Curriculum. Berlin: Max Planck Institute for the History of Science. DOI: 10.58049/zrb1-6e54