Monitoring the Nuclear Anthropocene

Knowledge of the onset of the Anthropocene is intimately connected to nuclear technoscience on the one hand and to the governance of environmental problems, such as atmospheric pollution, on the other. Historian of science Néstor Herran describes the historical roots of global environmental monitoring that helped frame the twin concepts of the Anthropocene and the “Great Acceleration.” He shows how global atmospheric monitoring grew out of the demands and institutions of nuclear military surveillance, and traces the varied ways in which these new research practices and data infrastructures were deployed to assess the impact of radioactive fallout.

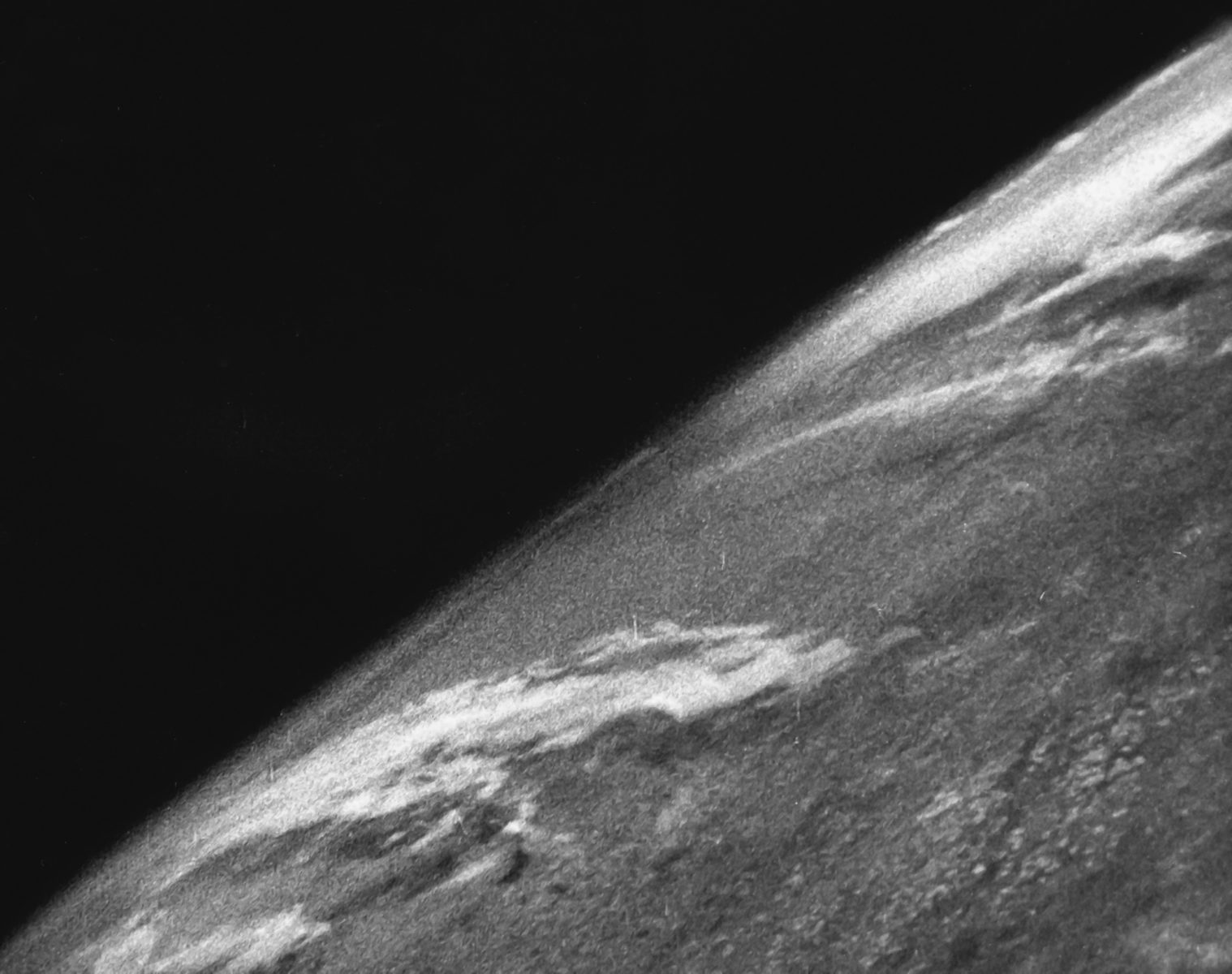

The first photograph taken from space, on October 24, 1946, onboard the sub-orbital US-launched V-2 rocket at White Sands Missile Range. Courtesy White Sands Missile Range/Applied Physics Laboratory, from Wikimedia, public domain

The Anthropocene, both scientifically and culturally, has been shaped to a great extent by nuclear technoscience. Among the most conspicuous influences are the many isotope analysis techniques used to search for candidate geological signatures marking the start of the Anthropocene as a geological epoch.1 So, too, are mid-twentieth-century narratives of nuclear apocalypse, which have offered a template for later imaginaries of environmental collapse.2 Indeed, one can argue that the nuclear has framed the emergence of the Anthropocene as an object of knowledge, as an indicator of environmental disruption, as a source of political contestation, and as an object of global governance.

The origin of these connections can be traced back to the late 1940s and 1950s, when the first networks to detect environmental radioactivity were built. Such networks, which can be considered the first global environmental monitoring infrastructures, played a significant role in the emergence of public controversies about the effects of radioactive fallout in the 1950s, as did their appropriation by the United Nations Scientific Committee on the Effects of Atomic Radiation (UNSCEAR), one of the first international expert committees to deal with global environmental problems.

The building of these infrastructures, whose data feed the models used to interpret the state of the environment and are then synthesized for public discussion, foreshadowed the emergence of environmental monitoring.3 This “way of knowing” has helped to define the idea of the Anthropocene and the “Great Acceleration” through the very practices of observing, modeling, and reporting embedded in the indicators produced and gathered by the International Geosphere-Biosphere Programme (IGBP).4

From military surveillance to UNSCEAR

The first networks to measure environmental radioactivity were built as part of US nuclear military programs in the late 1940s. Initially devised to detect nuclear activities in occupied Germany at the end of the Second World War, they were extended and improved after the war to monitor Soviet nuclear activities. Data gathered from the first series of atmospheric nuclear tests in the Marshall Islands (Operations Crossroads and Sandstone) helped build a surveillance system that was able to detect and locate the first Soviet atomic bomb test, conducted in August 1949.5

At the same time, these massive military investments considerably improved the ability to detect low levels of radioactivity by means of electronic instruments (such as the Geiger counter) and advanced techniques of isotope analysis. For example, US Air Force contracts funded the team led by nuclear chemist (and later Nobel Prize laureate) Willard Libby at the Institute for Nuclear Studies at the University of Chicago. Libby’s team developed counters precise enough to produce the first assays of the worldwide distribution of carbon-14 and tritium. These data, presented as an efficient tool for monitoring nuclear military activities around the globe, also aided in the design of the radiocarbon dating method.6

A Soviet DP-5B Geiger counter, designed for radiation measurement following a nuclear explosion. Photo courtesy Jozef Bogin

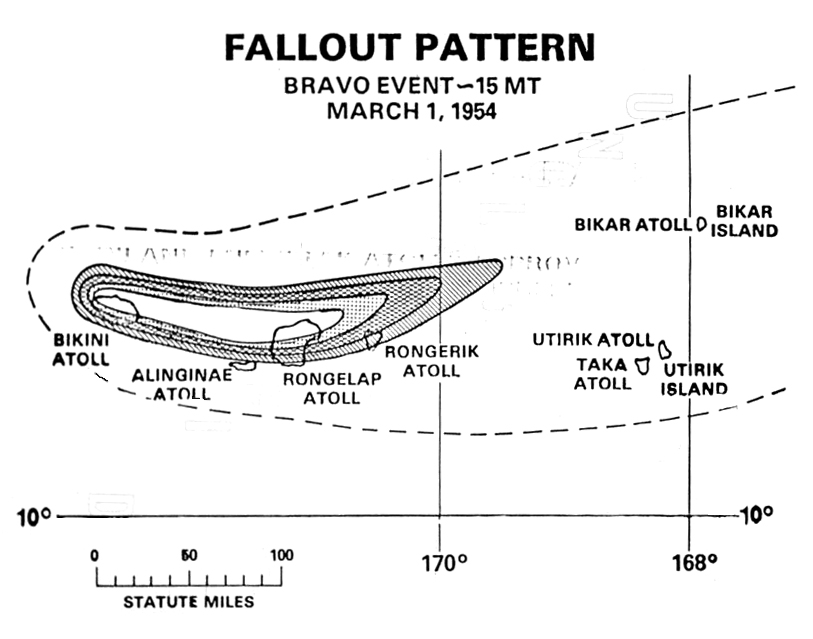

The development of the hydrogen bomb in 1952 took the situation in an unexpected direction. Up to a thousand times more powerful than the fission bombs that destroyed Hiroshima and Nagasaki, hydrogen bombs also produce far greater amounts of radioactive fallout. A dramatic example is the Castle Bravo nuclear test of March 1954, whose fallout contaminated the crews and cargo of Japanese fishing vessels operating in the Pacific Ocean. A public scare and international controversy ensued, compromising both American and Soviet nuclear strategies. This event also prompted the United Nations General Assembly to establish UNSCEAR, in 1955, to assess the effects of atmospheric fallout on human health and to help allay public anxieties.7 Organized as a hybrid intergovernmental forum combining scientific experts and diplomats and tasked with producing reports for the General Assembly, UNSCEAR can be considered the first institution built around a global environmental pollution crisis. It has since served as a model for subsequent UN committees dealing with environmental issues.

The main concern regarding radioactive fallout from nuclear tests was the radioisotope strontium-90, which is chemically similar to calcium and can therefore accumulate in the bones and cause leukemia. To assess this risk, the US Atomic Energy Commission (AEC) and the US-based RAND Corporation launched Project Sunshine in 1953 to determine the global dispersion of strontium-90 by measuring its concentration in human bones sampled around the world. Directed by Libby, Project Sunshine produced data that, combined with measurements from fallout monitoring stations worldwide, helped to calculate the global radiation dose received by an “average human” from the fallout of nuclear tests. The first UNSCEAR report, issued in 1958, also used these data to create the first maps of the global distribution of strontium-90.8 These maps are among the first instances in which the distribution of a pollutant was represented on a global scale.

Building infrastructures for radioactivity monitoring

The establishment of UNSCEAR was roughly contemporaneous with the launch of an initiative known as the International Geophysical Year (IGY, 1957–58).9 The IGY was conceived as an opportunity to advance the geophysical sciences collaboratively by mobilizing the radar, rocketry, and computer technologies produced during the Second World War. Major projects included the construction and launch of the first artificial satellites (the Soviet Union’s Sputnik I and II, in October and November 1957, and the US’s Vanguard I, in March 1958), the establishment of research stations in Antarctica, the first direct measurements of cosmic rays, and the creation of the first global meteorological databases.10

Inspired by the fallout radioactivity networks, the IGY laid the groundwork for the systems of environmental monitoring that would later come to characterize the environmental sciences. Its nuclear radiation program, for example, was designed to measure anthropogenic radionuclides and then use these data to trace meteorological, oceanographic, and ecological phenomena. A key actor in these initiatives was Roger Revelle, director of the Scripps Institution of Oceanography at the University of California San Diego and scientific head of the US delegation to the IGY. After serving in the US Navy during the war, Revelle took part in the 1946 nuclear tests in the Marshall Islands, studying the effects of the explosions on Bikini Atoll. In the 1950s, he consolidated relations between the Navy and the Scripps Institution, whose staff, resources, and programs expanded dramatically as a result. A primary research subject was the exchange of carbon-14 between the ocean and the atmosphere. This question mattered to Revelle not only for scientific reasons but also because he directed the National Academy of Sciences’ Committee on the Effects of Atomic Radiation on Oceanography and Fisheries, established to assess AEC plans to use the ocean as a sink for nuclear waste.11 This radiocarbon research provided the framework for Revelle and Hans Suess’s seminal article on the limits of CO2 absorption by the oceans,12 which advanced the hypothesis that anthropogenic carbon dioxide was accumulating in the atmosphere.

Mauna Loa Solar Observatory, part of the atmospheric baseline research complex on the mountain, sometime after 1953. Photograph courtesy University Corporation for Atmospheric Research, from Wikimedia, public domain

The ensuing scientific and governmental interest in monitoring atmospheric CO2 led to the construction, within the framework of the IGY, of two stations to measure this greenhouse gas: one in Antarctica and the other at the Mauna Loa Observatory in Hawaii. Using data from these stations, together with measurements of the upper atmosphere provided by the US Air Force, the chemist Charles Keeling produced the first curve representing the rise of atmospheric CO2.13 Since then, the monitoring system that Keeling developed at Mauna Loa has traced the increase of this greenhouse gas due to the burning of fossil fuels; the resulting record is currently one of the main indicators of the Anthropocene and the Great Acceleration.14

The controversy surrounding radioactive fallout from nuclear tests was, of course, not restricted to the United States: monitoring stations were built around the world, as most industrialized nations established their own networks to track the fallout of atmospheric tests. The Soviet network for monitoring American nuclear tests, built in 1949, included ground stations, meteorological balloons, and radioactivity-measuring instruments mounted on planes that patrolled the eastern and western borders of the Soviet Union.15 In Europe, countries with advanced nuclear programs, such as the United Kingdom and France, established their own national monitoring networks as part of military projects, while other nations built this infrastructure as part of their meteorological services or through nuclear-related public institutions.

Throughout the 1950s and 1960s, and beyond, European radioactivity monitoring infrastructures remained largely uncoordinated, even though the Euratom Treaty of 1958 obliged member countries to establish systems for detecting environmental radioactivity and gave the European Commission the right of access to these systems to verify their operation and efficacy. After a failed 1959 initiative by the Organisation for Economic Co-operation and Development to coordinate the exchange of this information, the only European systems actively monitoring environmental radioactivity were the ones run by the Joint Research Centre in Ispra, Italy, and the European contribution to the Global Network of Isotopes in Precipitation, established by the International Atomic Energy Agency in Vienna.

One explanation for the ultimately limited scope of these programs is the signing, in 1963, of the Partial Nuclear Test Ban Treaty, which ended US and Soviet nuclear testing in the atmosphere and greatly reduced radioactive fallout around the world. However, the development and expansion of nuclear power reactors in the late 1960s and 1970s provoked new concerns, such as the increasing disposal of radioactive waste at sea. Contested by many scientists, fishers’ associations, and the emerging environmentalist movement, the dumping of nuclear waste in the ocean prompted further studies of the circulation of water and life in the deep ocean. These debates also contributed to the establishment of the Global Environment Monitoring System for the Ocean and Coasts (GEMS/Ocean), set up in 1972 as an initiative under the Earthwatch program of the United Nations Environment Programme. GEMS/Ocean established the collection of environmental data through global surveillance networks as a key element in the governance of international environmental problems.16

A technocratic spirit?

Institutions and scientific practices developed around nuclear technology have inspired and framed modern environmental monitoring and, consequently, our current understanding of the Anthropocene, particularly the Great Acceleration, a concept based on the continuous measurement and assessment of Earth system indicators. Practices of observing, modeling, and reporting that were initially conceived for military surveillance went on to generate new fields of expertise and sociopolitical relations, and to circulate within them, in the wake of environmental controversies. These practices, however, still carry their entanglements with the technocratic spirit of the Cold War and the nuclear complex. It is therefore worth asking to what extent this spirit frames and limits our current understanding of ecological problems, for example by prefiguring geoengineering and nuclear energy as technological fixes for the current environmental crises.

Néstor Herran is lecturer in the History of Science at Sorbonne University. His work focuses on the history of nuclear science and technology, computer science, geophysics and environmental sciences in the Cold War.

Please cite as: Herran, N (2022) Monitoring the Nuclear Anthropocene. In: Rosol C and Rispoli G (eds) Anthropogenic Markers: Stratigraphy and Context, Anthropocene Curriculum. Berlin: Max Planck Institute for the History of Science. DOI: 10.58049/qqwk-my29